Answer Validation Exercise

Summary

Participant systems will receive a set of triplets (Question, Answer, Supporting Text) and they must return a boolean value for each triplet. Results will be evaluated against the QA human assessments. See Exercise Description for more details.

Keywords: Answer Validation, Question Answering, Recognising Textual Entailment (RTE)

Related Work: QA at CLEF, RTE Pascal Challenge

Objective

Anselmo Peñas, Álvaro Rodrigo, Valentín Sama, Felisa Verdejo. Testing the Reasoning for Question Answering Validation. Special Issue on Natural Language and Knowledge Representation, Journal of Logic and Computation. To appear (Draft version )

Exercise Description

Languages available (subtasks) There is a subtask for each language involved in QA:

Test data format

where pairs (Answer, Supporting Text) are grouped by question. Systems must consider the question and validate each of these pairs according to the response format: Response format

where all the answers for all questions receive one and only one of the following values:

and the confidence score is in the range [0-1] as usual:

Number of Runs

Number of samples

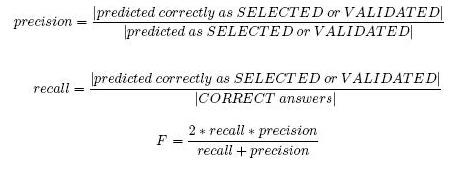

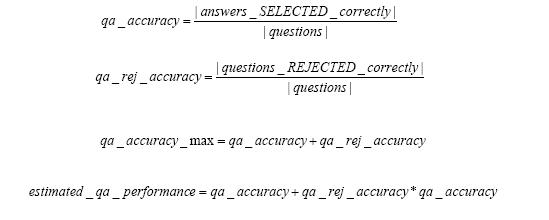

Evaluation

References [1] Anselmo Peñas; Alvaro Rodrigo; Valentin Sama; Felisa Verdejo. Testing the Reasoning for Question Answering Validation. Journal of Logic and Computation 2007 |

Resources

Hypothesis patterns

<question id="0001" type="OBJECT">What is Atlantis</question> Public Spanish Collection A training corpus named SPARTE has been developed from the Spanish assessments produced during 2003, 2004 and 2005 editions of QA@CLEF. SPARTE contains 2804 text-hypothesis pairs from 635 different questions (4.42 average number of pairs per question ). All the pairs have a document label and a TRUE/FALSE value indicating whether the document entails the hypothesis or not. The number of pairs with a validation value equals to TRUE is 676 (24%) and the number of pairs FALSE is 2128 (76%). Notice that the percentage of pairs FALSE is much larger than the percentage of pairs TRUE. We decided to keep this proportion since this is the result of real QA systems submission. A. Peñas, A. Rodrigo, and F. Verdejo. SPARTE, a Test Suite for Recognising Textual Entailment in Spanish In A. Gelbukh, editor, Computational Linguistics and Intelligent Text Processing. CICLing 2006., Lecture Notes in Computer Science, 2006. Public English Collection

|